Artificial intelligence systems no longer live only in research labs. They power search engines, recommend movies, approve loans, and help doctors spot diseases. But when an AI model drifts off course, customers can get bad answers, and trust can slip away. These changes are often small and depend on the situation, such as drifting from the training data, hallucinating answers, or producing unsafe outputs when users interact with the system in real time. As generative AI and large language models enter customer-facing and high-risk workflows, teams require more than offline evaluation; they necessitate continuous, production-aware visibility.

AI observability is the practice of understanding and safeguarding how AI systems behave in real-world production environments, so issues can be detected, mitigated, or prevented before they impact users, trust, or the business.

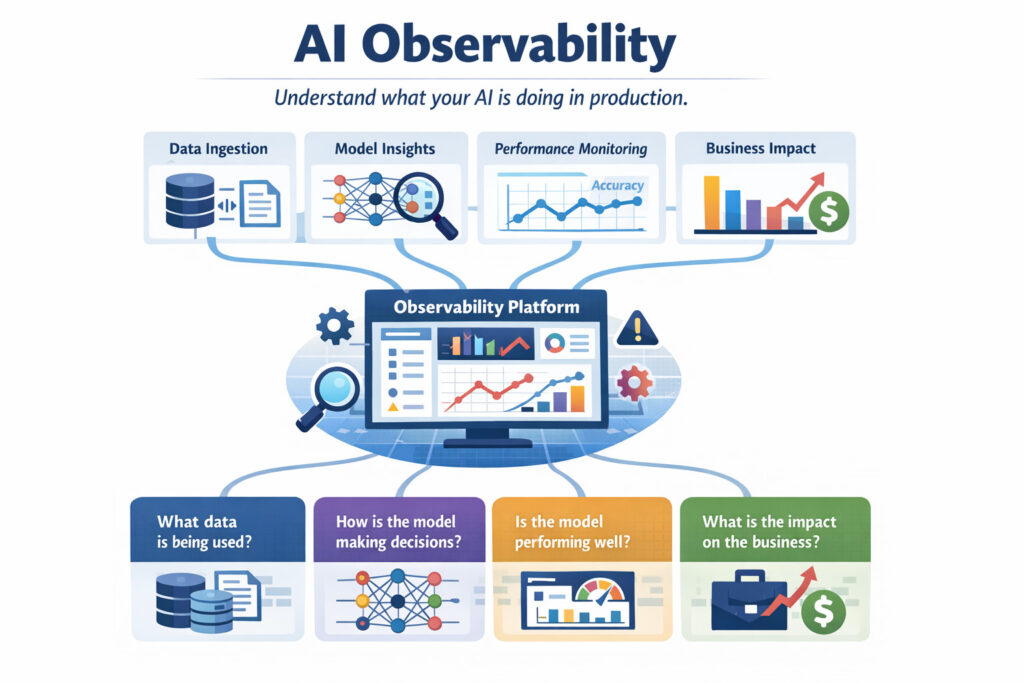

What Is AI Observability?

AI observability is the ability to understand what an AI system is doing, why it is doing it, and whether it is safe and effective to do so, while the system is running in production. It extends traditional observability beyond infrastructure and APIs to include data inputs, model behavior, outputs, and real-world impact.

In the past, AI model observability was mostly about monitoring statistical metrics, such as accuracy, latency, drift, and resource usage. But modern AI systems, such as LLMs and generative AI, make things more difficult. Their outputs are non-deterministic, highly context-sensitive, and often directly consumed by users. As a result, AI observability today must also capture prompts, responses, user intent, retrieval context, and safety signals.

Platforms such as Hud.io emphasize production-safe AI observability for software and AI-assisted workflows, combining real-time contextual awareness from production with model- and code-behavior signals so teams can understand not just whether a model or AI-generated output is working, but also whether it should be allowed to act in a given moment.

How AI Observability Works in Modern Systems

Modern ML stacks are built from many moving parts: feature stores, model serving layers, data pipelines, and monitoring dashboards. AI observability ties these parts together through four basic stages:

- Collection: In generative AI systems, collection also includes prompts, responses, embeddings, retrieved documents (for RAG pipelines), user session context, and intermediate agent steps. Capturing this production context is critical for understanding why a model behaved a certain way.

- Aggregation & Storage: A scalable time‑series or columnar database stores the collected data with rich metadata (model version, user segment, feature name, etc.).

- Analysis & Alerting: For LLM-based systems, analysis goes beyond statistical thresholds. AI observability tools evaluate semantic drift, hallucination risk, policy violations, toxicity, and other safety indicators. Alerts may trigger not only notifications but also automated guardrails, such as blocking unsafe outputs or routing responses for human review.

- Root‑Cause & Remediation: Drill-down views let teams examine a problem by feature, geography, or time window to determine why it started. After that, they can retrain the model, go back to an older, safer version, or change the data pipelines.

The best AI observability tools integrate with continuous‑integration (CI) and MLOps workflows, so fixes move from detection to deployment automatically.

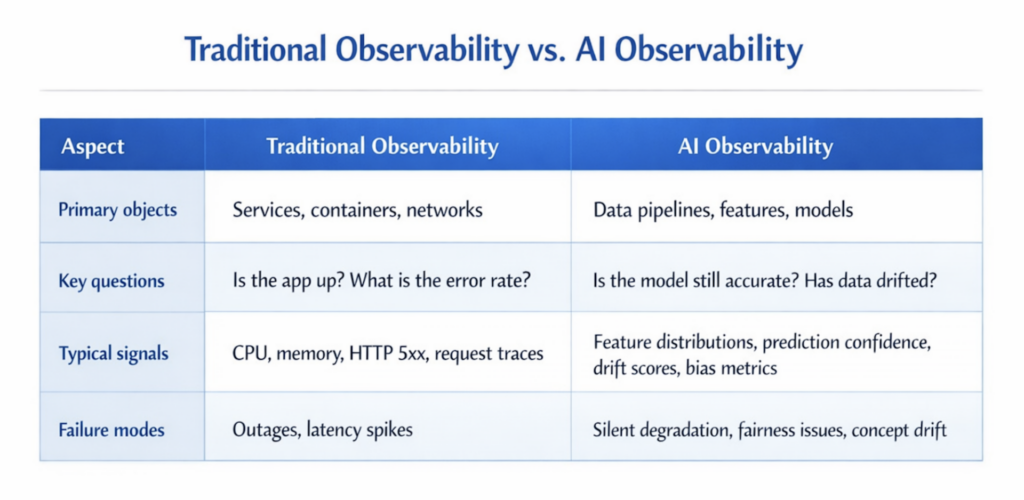

AI Observability vs. Traditional Observability

Traditional observability watches servers, databases, and APIs. It answers “What broke and where?” based on 3 pillars: logs, metrics, and traces. AI observability adds an extra dimension: Data and model quality.

Generative AI makes the gap even bigger. Traditional observability assumes systems always work the same way, but AI observability (especially for LLMs) must account for randomness, natural-language outputs, and each user’s context. Teams shouldn’t just ask, “Did the service respond?” They should also ask, “Was this response correct, appropriate, and safe in this situation?” This change means that AI that is aware of production is no longer a nice-to-have; it is a must-have.

Key Components of AI Model Observability

Data Drift Monitoring

Tracks how the statistical properties of live data differ from training data. Sudden changes in user behavior, seasonality, or upstream data sources can all trigger drift.

Concept Drift Detection

Even when the input data looks similar, the way it maps to the correct answer can change ( new fraud tactics). AI observability checks if that input-to-output relationship shifts, so you can retrain the model before accuracy falls.

Performance & Accuracy Metrics

When ground-truth labels are available, observability platforms compute metrics such as precision, recall, ROC‑AUC, mean absolute error, and F1 score. Observability platforms compute them continuously and compare them to service‑level objectives (SLOs).

Fairness & Bias Audits

Automatic slices by demographic attributes reveal whether certain groups get systematically worse predictions. Alerts fire when gaps exceed policy thresholds.

Explainability & Feature Attribution

Tools such as SHAP and integrated gradients can show which features contributed to each prediction. This helps fix outputs that aren’t what you expected and builds user trust.

Prompt & Response Monitoring

Keeps track of how changes in prompts, system instructions, and user context affect model outputs. This is necessary to determine why LLMs are behaving strangely and to maintain consistent response quality in production.

Context & Retrieval Awareness (RAG Observability)

For retrieval-augmented generation systems, observability means keeping track of which documents affected a response and whether the retrieved context was useful, out of date, or misleading.

Human-in-the-Loop Controls

High-risk predictions or uncertain generative responses can be flagged for human approval. This ensures AI systems remain production-safe even as they evolve.

Why AI Observability Matters

Without AI observability, failures often don’t show up until users are affected, like when they make the wrong choice, get unsafe results, or lose trust. As companies use AI more in customer-facing and self-driving workflows, observability serves as a safety net, enabling teams to ship faster without losing control. Teams can find problems early, stop bad behavior, and run AI at scale with confidence by using modern AI observability tools and production-aware AI model observability.

FAQs

What is the main goal of AI observability?

The main goal of AI observability is to let teams see how AI systems behave in production, in real time. AI observability helps teams identify unsafe, incorrect, or unexpected behavior early by analyzing data quality, model performance, system signals, and execution context. This way, it doesn’t affect users, revenue, or compliance.

How does AI observability help detect model drift?

Observability platforms examine how live feature distributions and prediction outcomes compare with historical baselines. Statistical tests show big changes, and visual dashboards show which variables changed. This early warning gives engineers time to retrain or recalibrate models before they become noticeably less accurate.

What data does an AI observability platform monitor?

A robust platform ingests raw inputs (features), model outputs (scores or classes), ground‑truth labels when available, system logs, resource metrics, and contextual metadata such as model version or user segment. Together, these signals create a full picture of model health.

How do AI observability tools support faster incident resolution?

Tools pair automated alerts with drill‑down analytics. When an anomaly fires, built‑in root‑cause explorers let teams slice by feature, cohort, or time range in seconds. Tight integrations with ticketing systems and CI/CD pipelines then speed fixes from notebook to production release.