Intro

LLMs can write code at scale, but until now they’ve been coding in the dark – cut off from the one thing that matters most: how software actually runs in production.

Without production context, they often write code that doesn’t hold up in the real world. They can’t see broken flows, performance bottlenecks, or cascading failures from external dependencies, to name just a few of the reasons systems fail at scale.

This limitation has been a fundamental barrier to truly effective AI-assisted development. Until now.

The Production Context Missing Link

Traditional AI coding assistants work with static codebases: they were trained on huge amounts of code at rest, and they use whatever is in the repository at a given moment as context. But production systems are dynamic environments where code behavior can differ dramatically from its static representation, and real-time changes often carry important signals.

Consider these real-world scenarios:

- A function that works perfectly in development but fails under production load

- External API dependencies that intermittently time out

- Database queries that perform well with test data but slow down with real user data

- Memory leaks that only manifest after hours of continuous operation in a specific configuration

AI agents without access to this runtime context can only make educated guesses, leading to solutions that may not address the actual problems.
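To make the first scenario concrete, here is a minimal sketch of a function that looks fine on development-sized input but degrades at production scale. The function name and input sizes are made up for illustration; nothing here comes from Hud.

```python
def dedupe_preserving_order(items):
    """Remove duplicates while keeping first-seen order.

    Uses list membership checks, so the work grows quadratically with
    the number of unique items. Returns the result and an upper bound
    on how many elements were scanned.
    """
    seen = []
    scanned = 0
    for item in items:
        # `item not in seen` walks the list, scanning at most len(seen) entries.
        scanned += len(seen)
        if item not in seen:
            seen.append(item)
    return seen, scanned


# Development-sized input: the cost is invisible.
_, dev_cost = dedupe_preserving_order(list(range(10)))

# Production-sized input: same code, very different cost.
_, prod_cost = dedupe_preserving_order(list(range(2000)))

print(dev_cost, prod_cost)
```

With 10 unique items the scan bound is 45; with 2,000 it is 1,999,000. Nothing in the source code changes between the two runs, which is why a reading of the repository alone gives no hint of the problem.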

The Hud Difference

Hud is fundamentally different from traditional observability solutions. It runs with your service, capturing function-level behavior automatically – no configuration, instrumentation, added logs, dashboards, or maintenance needed.

This approach provides a continuous stream of production context that AI agents can leverage to make informed decisions about code generation and optimization.

MCP Integration: Bridging the Gap

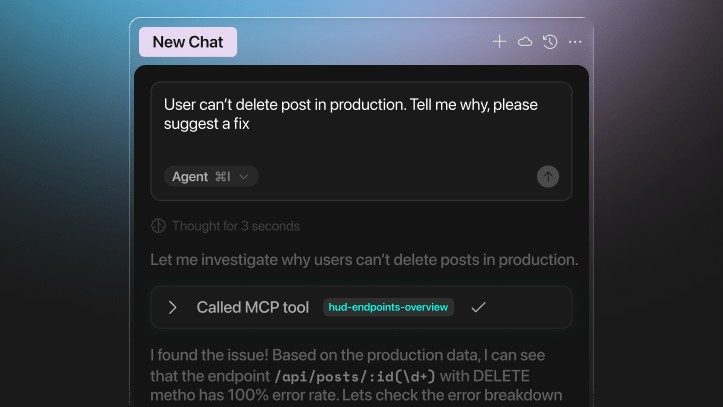

Hud’s MCP server provides a standardized way for AI agents to access real-time function-level context. Integrating it enables agentic AI environments like Cursor, Windsurf, and GitHub Copilot to reason over the actual runtime behavior of the code they are working on.

How It Works

The integration is simple:

- **Install Hud** in less than a minute

- **Connect your AI environment** by installing Hud’s MCP server

- **Start coding** with production-aware AI assistance

The AI agent now has access to:

- Real-time function performance metrics

- Error patterns and propagation paths

- Usage frequency and patterns

- External dependency health

- Business impact of potential changes
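As a rough illustration of what this kind of function-level context could look like once it reaches an agent, here is a small sketch. The `FunctionContext` fields, the `summarize_for_agent` helper, and all the values are hypothetical; they are not Hud’s actual schema or MCP response format.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class FunctionContext:
    """Hypothetical per-function production snapshot (not Hud's schema)."""
    name: str
    calls_per_minute: float
    p95_latency_ms: float
    error_rate: float  # fraction of calls that failed, 0.0 to 1.0
    degraded_dependencies: Tuple[str, ...] = ()


def summarize_for_agent(ctx: FunctionContext) -> str:
    """Render the snapshot as plain text an agent could read as context."""
    lines = [
        f"function: {ctx.name}",
        f"calls/min: {ctx.calls_per_minute:.0f}",
        f"p95 latency: {ctx.p95_latency_ms:.0f} ms",
        f"error rate: {ctx.error_rate:.1%}",
    ]
    if ctx.degraded_dependencies:
        lines.append("degraded dependencies: " + ", ".join(ctx.degraded_dependencies))
    return "\n".join(lines)


# Made-up example values, for illustration only.
checkout = FunctionContext("checkout.submit_order", 1200, 340, 0.023, ("payments-api",))
print(summarize_for_agent(checkout))
```

The point of the sketch is the shape, not the fields: a compact, per-function summary is something an agent can weigh alongside the source code when it proposes a change.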

What Production-Aware AI Looks Like

With production context, AI agents can:

- Generate more reliable code: based on actual runtime behavior rather than assumptions

- Identify performance issues: before they become problems

- Suggest optimizations: that consider real-world usage patterns

- Avoid common pitfalls: by understanding how similar changes have performed in production
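One way to picture "identifying performance issues before they become problems" is a simple comparison of current latency against a baseline. The sketch below assumes per-function p95 latencies are available as plain dictionaries; the function names, the numbers, and the 1.5x threshold are illustrative assumptions, not something Hud prescribes.

```python
def find_latency_regressions(baseline_ms, current_ms, ratio_threshold=1.5):
    """Return functions whose current p95 latency is at least
    `ratio_threshold` times their baseline, mapped to that ratio."""
    regressions = {}
    for name, current in current_ms.items():
        baseline = baseline_ms.get(name)
        if baseline is None or baseline <= 0:
            continue  # new function or unusable baseline: nothing to compare
        ratio = current / baseline
        if ratio >= ratio_threshold:
            regressions[name] = round(ratio, 2)
    return regressions


# Made-up p95 latencies in milliseconds.
baseline = {"checkout": 120.0, "search": 40.0}
current = {"checkout": 300.0, "search": 44.0, "new_endpoint": 10.0}

print(find_latency_regressions(baseline, current))
```

Here only `checkout` is flagged, at 2.5 times its baseline. An agent with access to this kind of signal could avoid adding work to a function that is already slowing down.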

The Impact on Development Workflow

The difference is profound. Instead of generating code in isolation, AI agents can now consider:

- How functions perform under load

- Which patterns have proven successful in your specific environment

- What changes might impact critical user flows and how

- Whether proposed solutions align with observed production patterns

This transforms the production-readiness of AI-assisted development from a guessing game into a data-driven process.

Why Context Matters

Generating code without production context now feels reckless. It’s like driving blindfolded: you might get where you’re going, but you’re much more likely to hit obstacles along the way.

With production context, AI agents can:

- Make informed architectural decisions: based on how similar patterns perform

- Optimize for real usage: rather than theoretical scenarios

- Avoid performance regressions: by understanding current bottlenecks

- Consider business impact when suggesting changes

The Future of AI-Assisted Development

This integration represents a fundamental shift in how we think about AI coding assistants. It’s not just about generating code faster – it’s about generating better code that works in the real world.

The most effective AI development environments will be those that can access and process real-time production data. This requires not just better models, but better context: systems that continuously capture and provide the information agents need to make intelligent decisions.

---

Ready to see what production-aware AI looks like? The future of AI-assisted development is here, and it’s powered by real production context.